It has been possible to advance AI thanks to factors like increased access to massive computing power. In the same way, the increase in automation, which AI facilitates, advances the development of unmanned, autonomous hydrographic operations. This is an exciting prospect, as the advantages of such operations are many: efficiency gains, reduction in costs in the form of vessel time and man hours, as well as the minimisation of environmental impacts.

At EIVA, we are working towards autonomous hydrographic operations by developing software tools that provide automatic, real-time data processing and navigation aiding. In 2017, we established a dedicated software development team with engineers specialising in machine learning, machine vision and deep learning. The first official EIVA software version utilising AI was released in 2018-2019, when we made it possible to use NaviSuite Deep Learning for automatic interpretation of data in NaviSuite Nardoa, our software bundle for pipeline inspections.

In this article, we will dive into how we are using machine learning to create software solutions that support autonomous hydrographic operations. We provide examples from projects we are working on – in which we test the waters of autonomous operations.

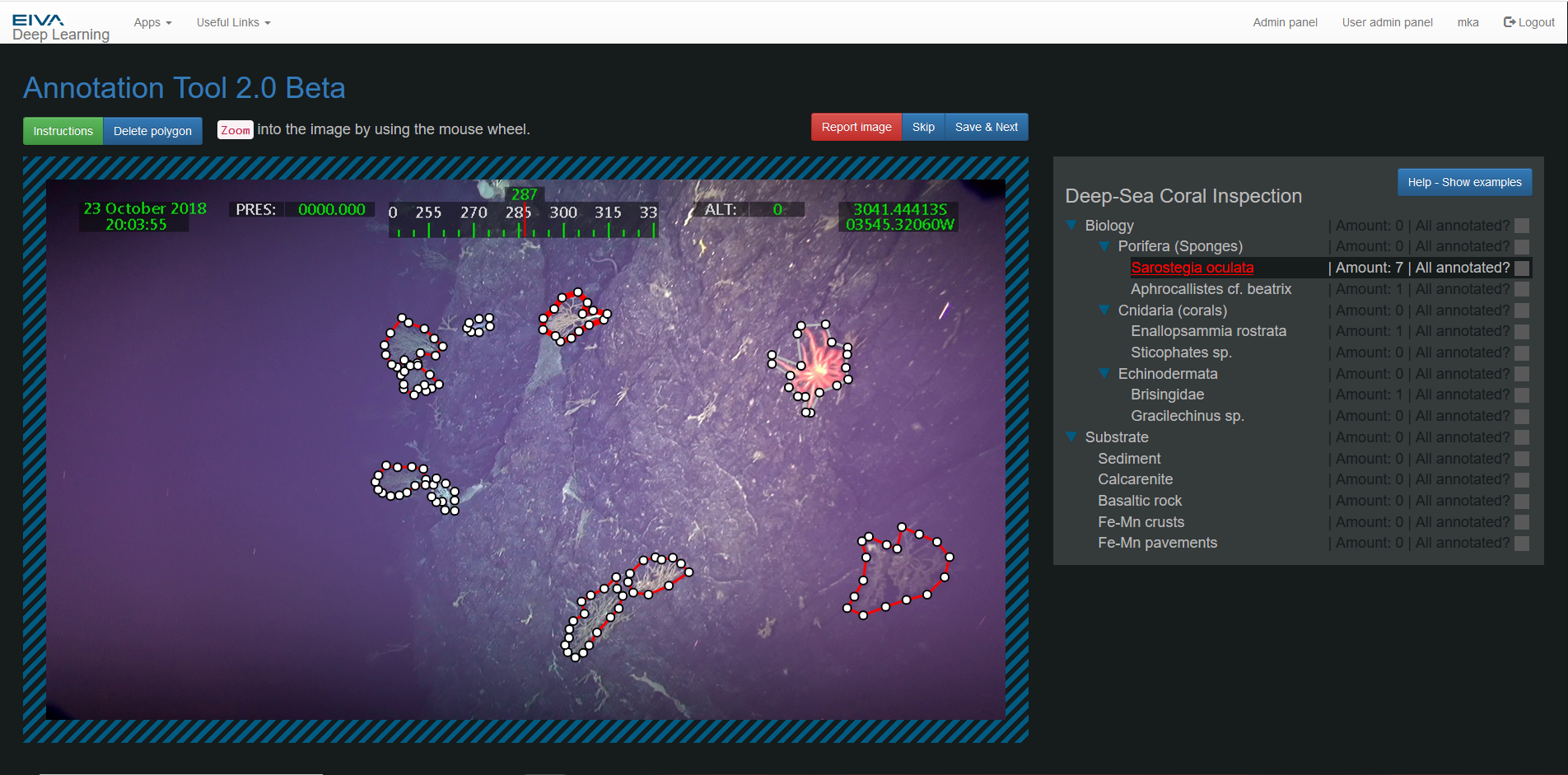

NaviSuite Deep Learning is a software tool which can identify objects from images. It is based on a neural network model, which is trained on thousands of images from various providers, typically NaviSuite users. To train the network, a human must manually mark objects of interest in images using our Annotation Tool. Objects can be anything from a pipeline joint to a particular species of coral.

Several coral species are marked on an image, using the NaviSuite Deep Learning Annotation Tool

In a project with São Paulo University, we are training NaviSuite Deep Learning to identify coral species and their habitats. Currently, an expert from the university is marking the various coral species in images to teach the neural network to identify them. Once developed, this NaviSuite Deep Learning capability may be used for monitoring and maintaining sustainable marine ecosystem services.

We are in the process of planning another application: monitoring mussel farms to ensure they are operating optimally. This is for a development project in partnership with Wittrup Seafood, WSP and several others, with the support of the Danish Ministry of Environment and Food. So far, we have designed a setup to perform surveys of mussel farms, and the next step is to train NaviSuite Deep Learning to identify important factors, such as how many mussels are growing in different locations, whether they are sick and whether there are pests, such as starfish, on them.

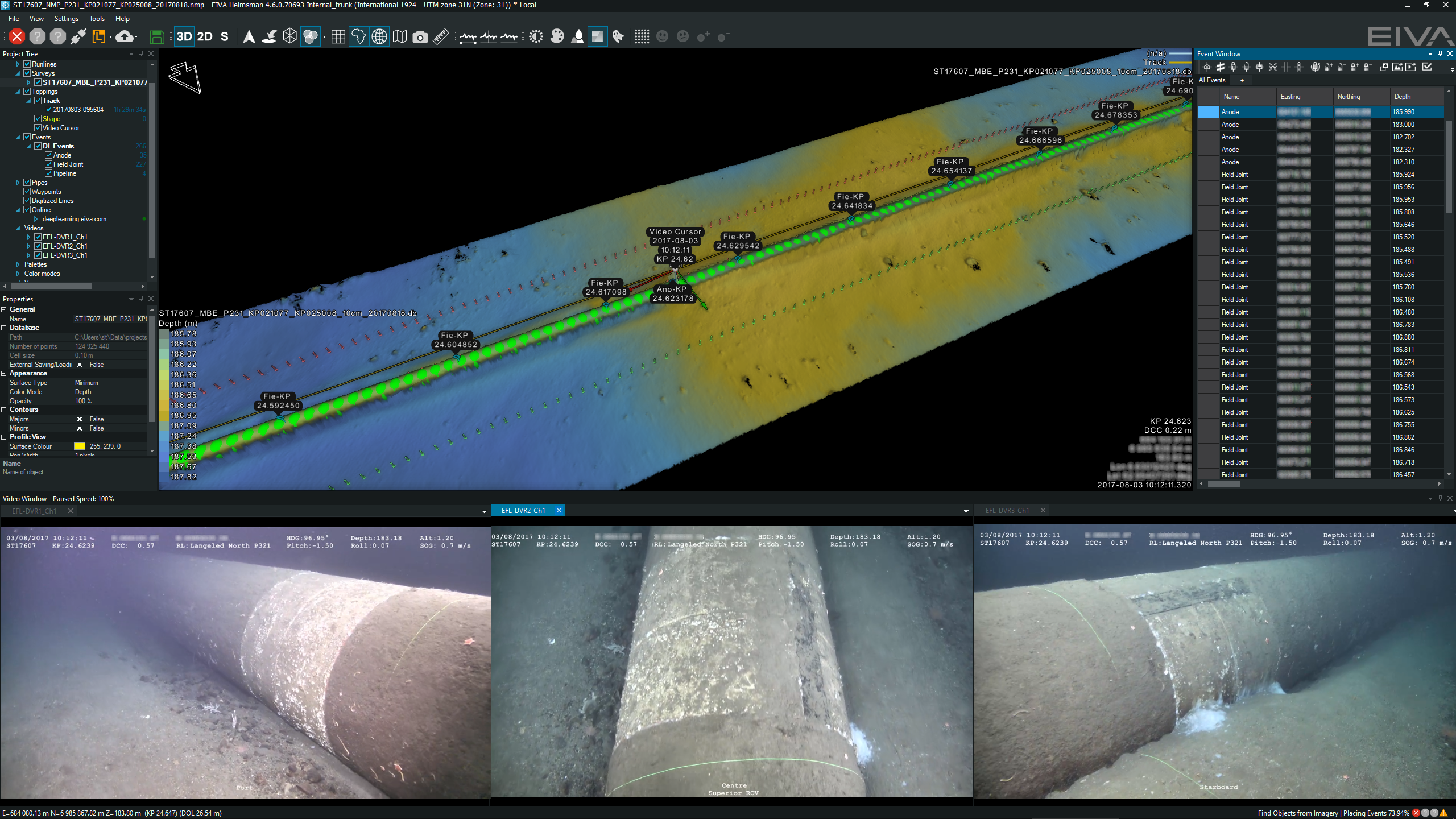

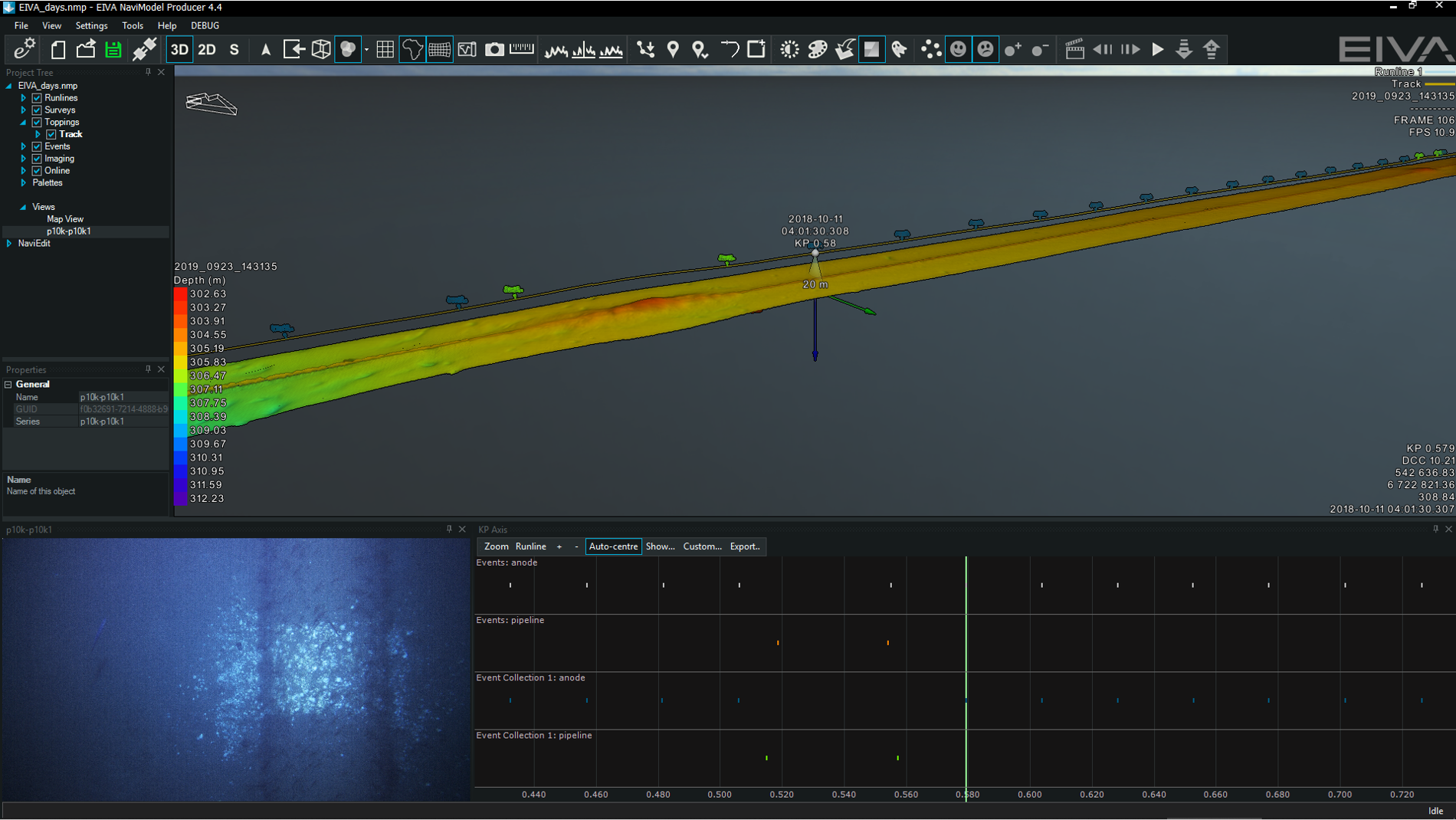

It takes a lot of time and data to teach a neural network to recognise such complex objects. While developing NaviSuite Deep Learning for its first application, pipeline inspections, we have trained it to identify over 20 different events which occur along a pipeline. For example, it can mark pipe visibility, anodes, field joints, debris, marine life, damage and more. This has been possible thanks to the help of our customers, who have shared data from their pipelines.

There are three ways to incorporate NaviSuite Deep Learning into your operations. Firstly, it can be used on a cloud service hosted by EIVA, which is typically a good solution for onshore data processing staff. Secondly, it can be used on a rack server, useful for processing onboard a vessel. Finally, it can be used on an onboard computer, which can be integrated into an AUV or USV. The onboard computer is ideal for autonomous operations, as this makes it possible to let the AUV or USV change mission based on objects detected via NaviSuite Deep Learning.

NaviSuite Deep Learning is incorporated into NaviSuite software. This means that when it identifies objects of interest, it can systematically organise this information for ease of use.

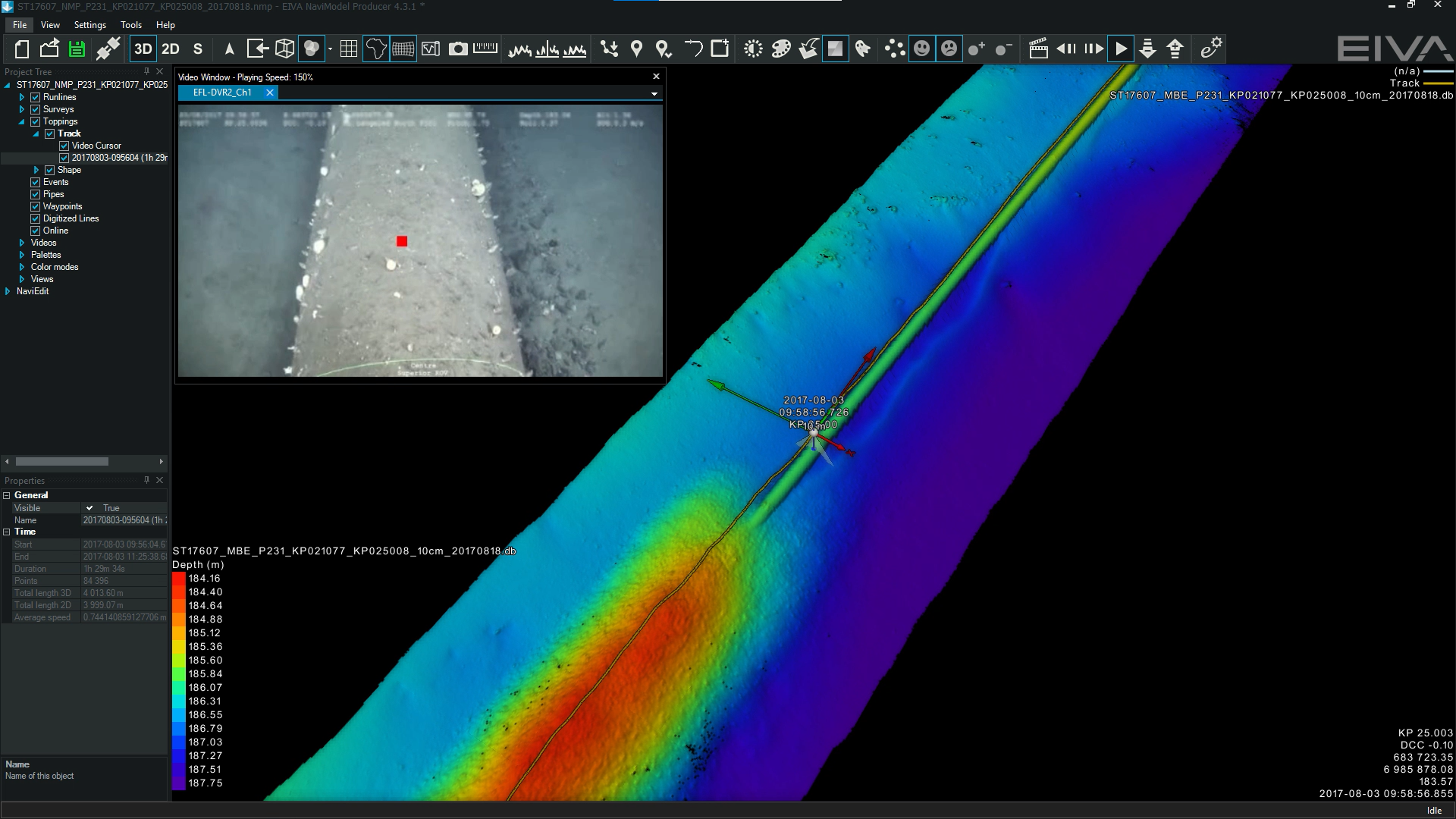

Events marked along 3D model of pipeline, with video views of an anode shown at the bottom. In the upper right, you can see the full list of events.

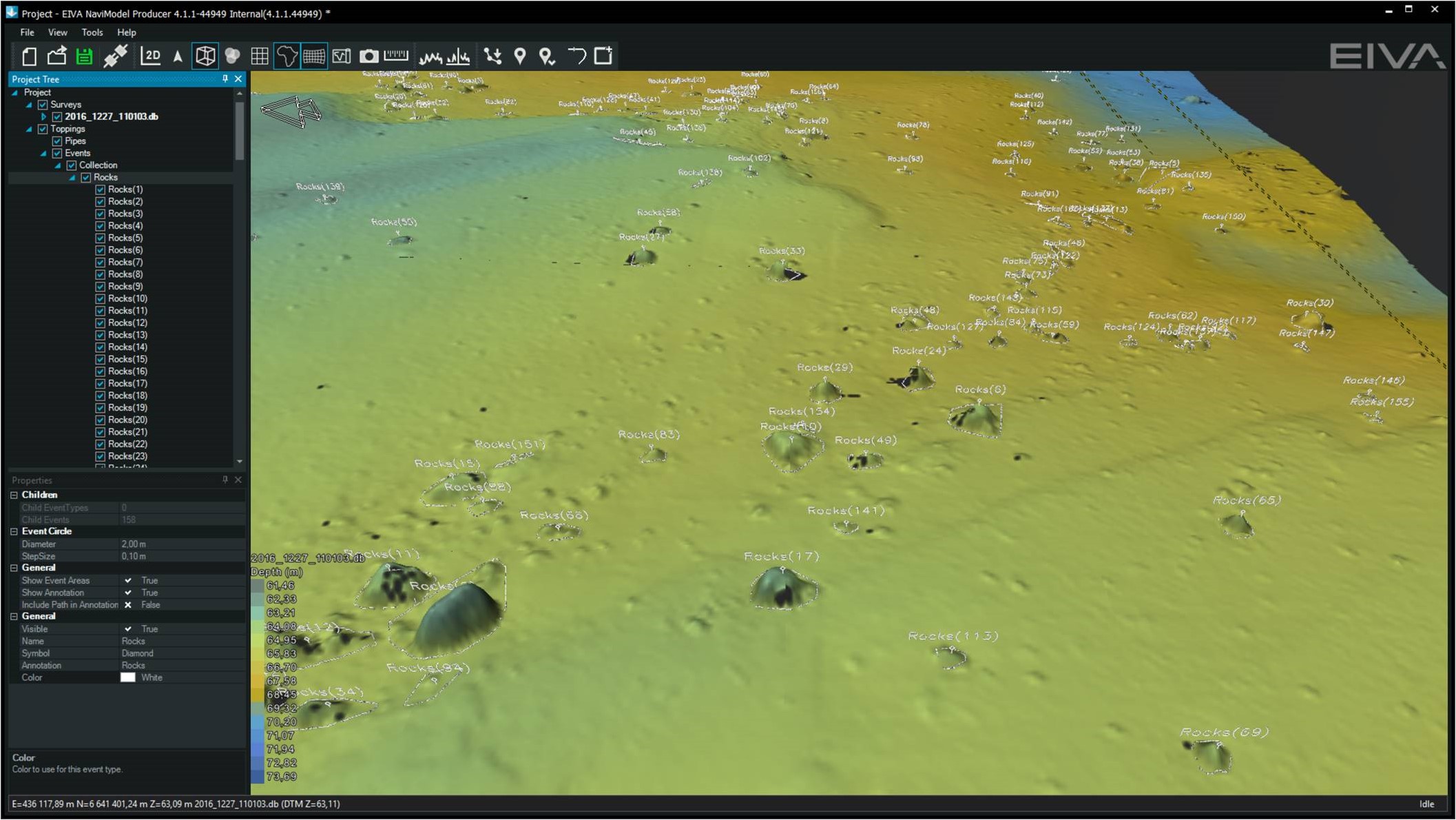

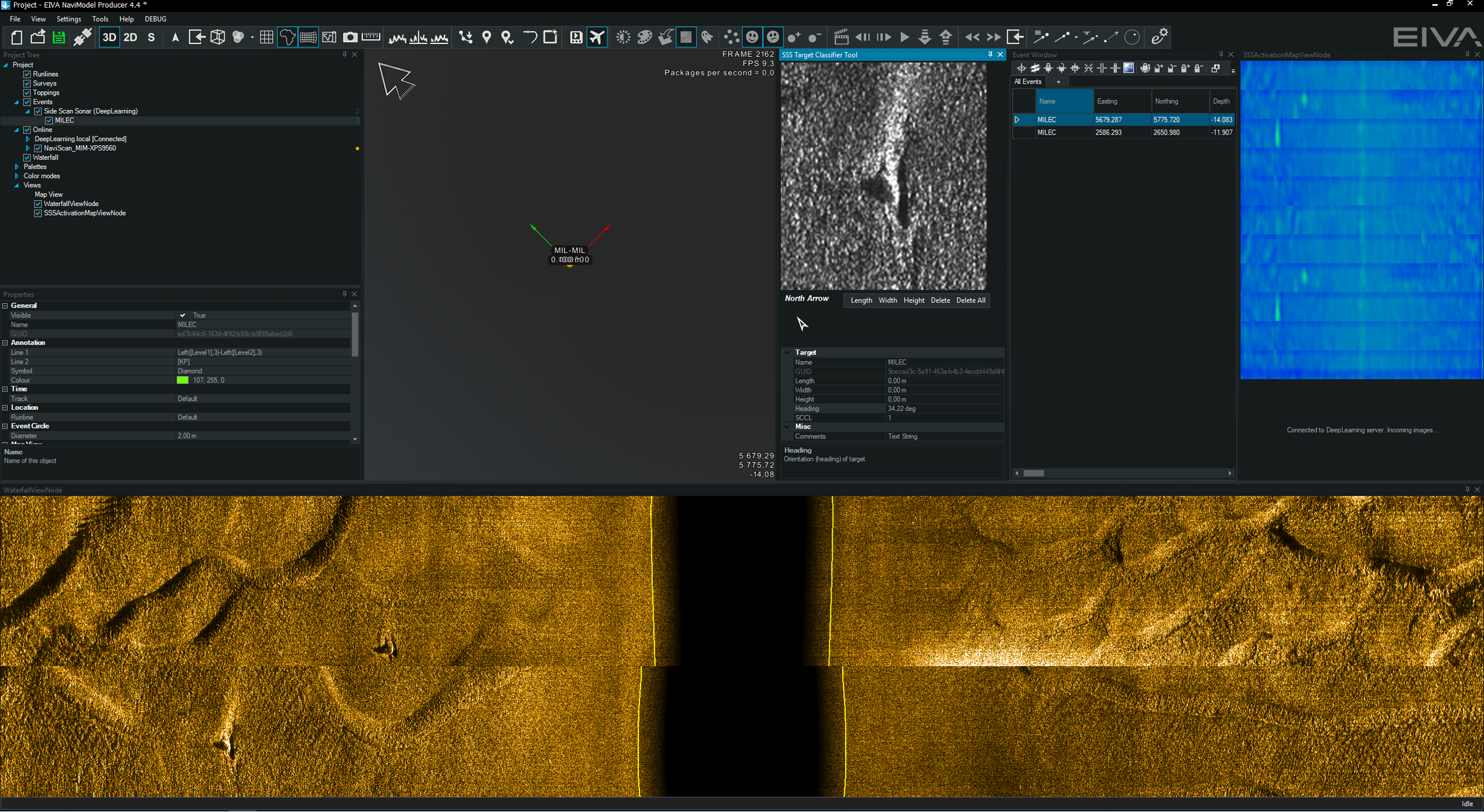

In addition to NaviSuite Deep Learning, we have developed other software tools for identifying targets on the seabed, such as rocks and boulders, debris and man-made objects. Identifying such objects is vital when planning construction on the seabed or removing abandoned fishing gear, which harms marine life if left in the water. The tools can be used in NaviModel, our software solution for data modelling and visualisation. Objects identified with these tools can be automatically compiled in an intuitive overview, along with information such as their type and location.

The Find Object tool tags boulders on the seabed in Digital Terrain Models (DTM) based on their slope
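To make the slope-based idea concrete, here is a minimal Python sketch. It is not the Find Object implementation: it assumes the DTM is a regular grid of seabed heights in metres, and the function name `steep_cells`, the cell size and the slope threshold are all hypothetical. It simply flags grid cells whose local slope exceeds the threshold, which is where the flanks of a boulder would show up.

```python
import math

def steep_cells(dtm, cell_size=1.0, max_slope_deg=30.0):
    """Return (row, col) of interior grid cells whose slope exceeds max_slope_deg.

    dtm is a list of rows of heights in metres (hypothetical input layout).
    """
    flagged = []
    for r in range(1, len(dtm) - 1):
        for c in range(1, len(dtm[0]) - 1):
            # Central differences approximate the height gradient in x and y.
            dz_dx = (dtm[r][c + 1] - dtm[r][c - 1]) / (2 * cell_size)
            dz_dy = (dtm[r + 1][c] - dtm[r - 1][c]) / (2 * cell_size)
            slope_deg = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
            if slope_deg > max_slope_deg:
                flagged.append((r, c))
    return flagged

# Made-up example: a flat seabed with a single 1 m bump in the middle.
dtm = [[0.0] * 5 for _ in range(5)]
dtm[2][2] = 1.0
print(steep_cells(dtm, cell_size=1.0, max_slope_deg=20.0))
# → [(1, 2), (2, 1), (2, 3), (3, 2)]
```

The flagged cells form a ring around the bump: the top of the bump itself is locally flat, so a slope filter picks up the flanks of an object rather than its peak.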

The Automatic Target Recognition (ATR) tool uses a variety of NaviModel functions to identify objects – in addition to DTMs, it can be used on sidescan sonar data, as shown here, where two objects have been automatically registered as events, as shown in the event window (upper right)

In the future, NaviModel will not only be able to automatically register targets on the seabed, but also register the seabed type. Together with several customers, we are developing a tool which uses backscatter data to segment areas of the seabed based on their material type, for example clay, gravel, sand or rock. These different materials reflect sound at different intensities, which can be seen and detected automatically in the backscatter data. This is yet another way we are developing software to make the most of available data and support subsea operations.
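As a rough illustration of how intensity differences can be separated automatically, the sketch below groups backscatter values into classes with a plain one-dimensional k-means. This is an assumed technique chosen for illustration, not a description of the tool under development, and the function name `segment_by_intensity` and the sample values are made up. Mapping each class to a material such as sand or rock would still require ground-truth samples.

```python
def segment_by_intensity(values, k=3, iterations=20):
    """Cluster 1-D backscatter intensities into k classes with plain k-means."""
    lo, hi = min(values), max(values)
    # Spread the initial centres evenly between the weakest and strongest return.
    centres = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assign each sample to its nearest centre.
        labels = [min(range(k), key=lambda j: abs(v - centres[j])) for v in values]
        # Move each centre to the mean of its samples (keep it if it has none).
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:
                centres[j] = sum(members) / len(members)
    return labels, centres

# Made-up backscatter strengths in dB for nine seabed patches.
backscatter_db = [-32.0, -31.5, -30.8, -24.1, -23.7, -24.4, -15.2, -14.9, -15.6]
labels, centres = segment_by_intensity(backscatter_db)
print(labels)
# → [0, 0, 0, 1, 1, 1, 2, 2, 2]
```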

With the ability to interpret data automatically, the next step is to use that information to automate navigation and survey planning. NaviPac is our software solution for positioning and navigation of surface and subsea vessels.

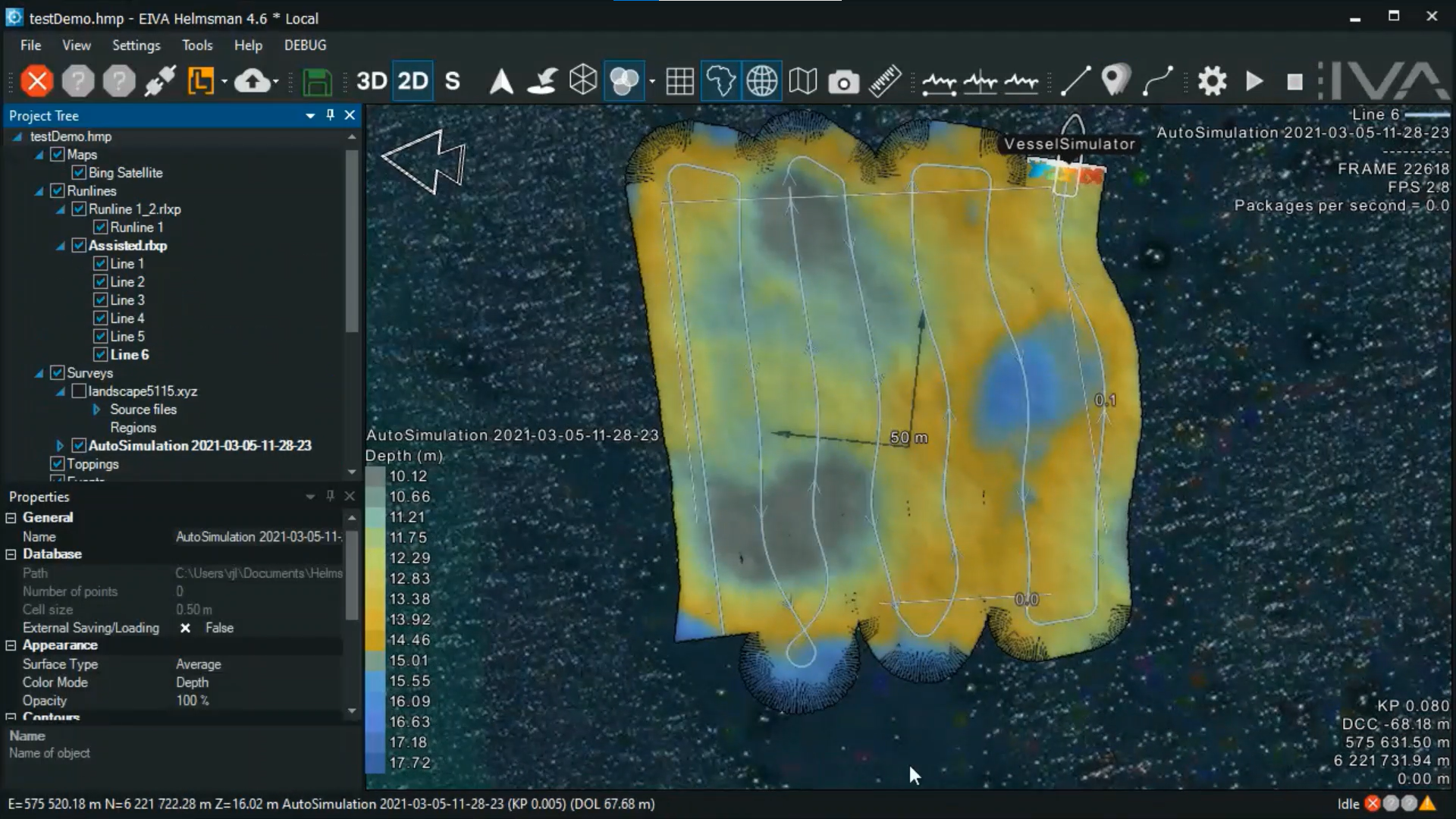

NaviPac currently facilitates automatic navigation through the Coverage Assist tool. This tool automatically plans navigation runlines in real time based on the detected depth, to ensure optimal coverage when scanning an area. The operator simply defines the area to be scanned, as well as the first runline. Then, Coverage Assist creates the most efficient route one runline at a time. It does so by designing the shortest route to turn, and by following the outer limit of bathymetry data collected on the previous line.

In its calculations, Coverage Assist considers the vessel’s turn capabilities, as well as custom coverage and density requirements. In other words, it designs the optimal route for that specific vessel, with that survey’s specific requirements, and in that way adapts to ensure full area coverage.
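The geometric idea of following the outer limit of the previous line can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the Coverage Assist algorithm: it assumes a symmetric swath whose half-width is depth times the tangent of a hypothetical half-angle, assumes the depth at the new line is close to the depth at the previous swath edge, and ignores turn capabilities and density requirements. The function name `next_runline` and its parameters are invented for the example.

```python
import math

def next_runline(edge_points, swath_half_angle_deg=60.0, overlap=0.1):
    """Place the next runline beside the outer edge of the previous swath.

    edge_points: (along_m, across_m, depth_m) samples on the outer swath edge.
    overlap: fraction of the half-swath that should overlap the previous line.
    Returns (along_m, across_m) waypoints for the next runline.
    """
    tan_half = math.tan(math.radians(swath_half_angle_deg))
    waypoints = []
    for along, across, depth in edge_points:
        half_width = depth * tan_half  # expected half-swath at this depth
        # Inner edge of the new swath lands overlap * half_width inside old data.
        waypoints.append((along, across + (1.0 - overlap) * half_width))
    return waypoints

# Made-up edge of the previous line: the seabed shoals from 20 m to 15 m.
edge = [(0.0, 20.0, 20.0), (50.0, 18.0, 18.0), (100.0, 15.0, 15.0)]
for along, across in next_runline(edge, swath_half_angle_deg=45.0, overlap=0.1):
    print(along, round(across, 1))
```

Because the offset scales with depth, the planned line bends towards the previous one as the water gets shallower, which is why a depth-driven plan can give full coverage where evenly spaced, pre-planned lines would leave gaps.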

A demonstration of the runlines and scan results of a survey completed using the Coverage Assist tool

The Coverage Assist tool excels at automatically surveying a fixed area. However, surveying an area which could be positioned differently than expected, such as pipelines or a mussel net, requires more navigation-aiding. For this, we can make good use of the automatic, real-time data analysis described in the previous section.

Currently, we are developing several methods for autonomous underwater navigation aiding for a variety of applications. What these methods all have in common is that they provide automatic positioning by tracking objects or structures. We are also working on using Visual SLAM (simultaneous localisation and mapping) to provide real-time information as an input for future autonomous navigation.

When you wish to navigate with respect to an object of interest, such as a pipeline, the first step is to be able to identify it. The previous section covered how we use machine learning to teach the computer to identify an object automatically – in the following, we will dive into how to use this for navigation with respect to such objects.

In terms of navigation methods, we have made most progress in developing methods for navigating along a pipeline, as this was our first application for NaviSuite Deep Learning. We have developed two methods, based on sonar and video data. In both, the idea is to track the top of pipe (TOP) and navigate by following it.

The visual pipetracker navigation method involves calculating the TOP, which is marked here with a red square

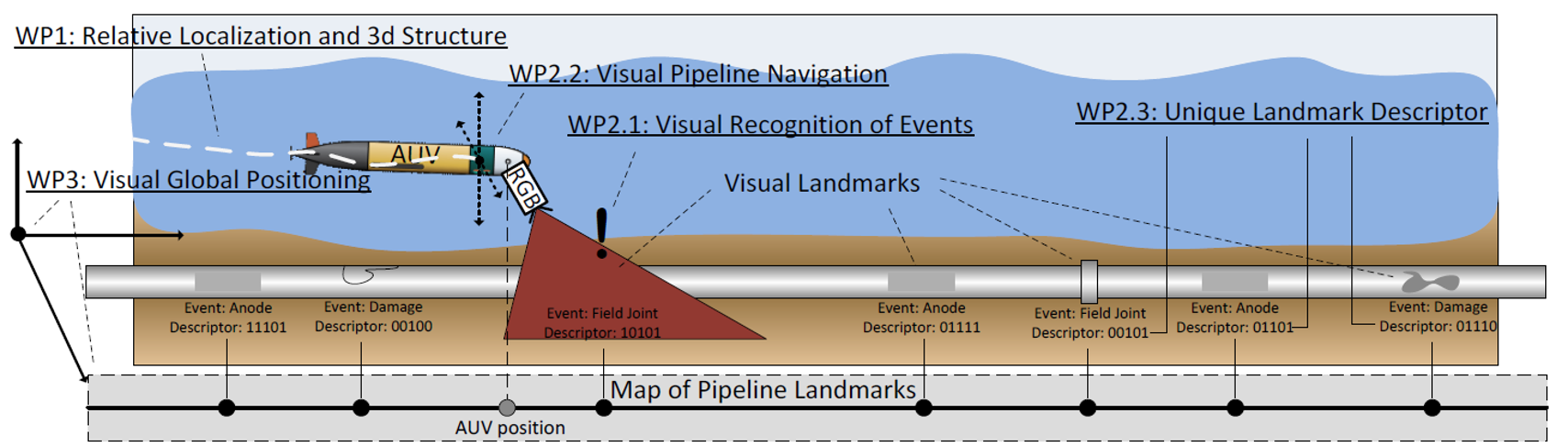

While it is great to be able to follow an object of interest, it is often necessary to know where you are in the world, so that the object is mapped in terms of its global location. Just as you may see street names and buildings you recognise, there are landmarks under the sea which can help you navigate. These landmarks can be recognised automatically using NaviSuite Deep Learning, and then used to position your survey platform. This provides a more dynamic way to perform automatic navigation.

When looking for landmarks, there are some objects under water that we can use because we know their precise global location. These can, for example, be shipwrecks or points where two pipelines cross each other. Of course, we can also create such underwater landmarks ourselves if we wish, by placing a marker, or marking the position of a natural feature, such as a boulder with a recognisable shape.

An illustration of how an AUV can be positioned based on landmarks along a pipeline

There are also structures which tell us where we are relative to somewhere else. Similar to street intersections, along a pipeline there are field joints and anodes at certain intervals. By knowing the distance between these events, we have one more piece of information to map our location and speed.
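The arithmetic behind this is simple enough to show directly. The sketch below is an illustration only: it assumes a constant, known spacing between anodes, assumes the first detection coincides with a known starting distance along the pipe, and uses made-up timestamps. The function name `along_pipe_estimates` is hypothetical.

```python
def along_pipe_estimates(detection_times_s, spacing_m, start_m=0.0):
    """Estimate distance along the pipe and speed from timed anode detections.

    detection_times_s: timestamps (seconds) at which consecutive anodes were seen.
    spacing_m: assumed constant distance between neighbouring anodes.
    Returns one (position_m, speed_m_per_s) pair per detection after the first.
    """
    estimates = []
    for i in range(1, len(detection_times_s)):
        dt = detection_times_s[i] - detection_times_s[i - 1]
        position = start_m + i * spacing_m   # i intervals passed since the start
        estimates.append((position, spacing_m / dt))
    return estimates

# Made-up detections: three anodes, assumed to be 12 m apart.
for position, speed in along_pipe_estimates([0.0, 24.0, 50.0], spacing_m=12.0):
    print(position, round(speed, 2))
```

In practice such estimates would be one input among several, combined with the vehicle's other positioning sensors rather than used on their own.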

NaviSuite Deep Learning recognition of anodes is used to track the position of the pipeline

The object you wish to navigate with respect to may be in the water, rather than on the seabed. In this case, it is advantageous to use a Forward-Looking Sonar (FLS). FLS is often used for obstacle avoidance, but also excels at aiding navigation, especially when you wish to approach an object without colliding with it. A useful ability, since objects floating in water rarely stay in place!

One application in which we are using FLS is a setup for mussel farms. FLS is used to help navigation along mussel nets while performing video surveys of the mussels. This allows us to record a video without colliding with the net and harming the mussels. The video can then be analysed by NaviSuite Deep Learning, which will be taught to recognise important characteristics of mussel farms, such as the number of mussels, or occurrences of disease.

Another application in which FLS comes in handy is AUV recovery. In addition to using FLS to ‘see’ the AUV, we use machine learning to track its relative location. This makes it possible to navigate towards it precisely in order to securely capture it. If the water is clear, video can also be used to track the relative location of the AUV. Both with FLS and video, the method involves using machine learning to recognise the object of interest, and then navigating with respect to its relative position.

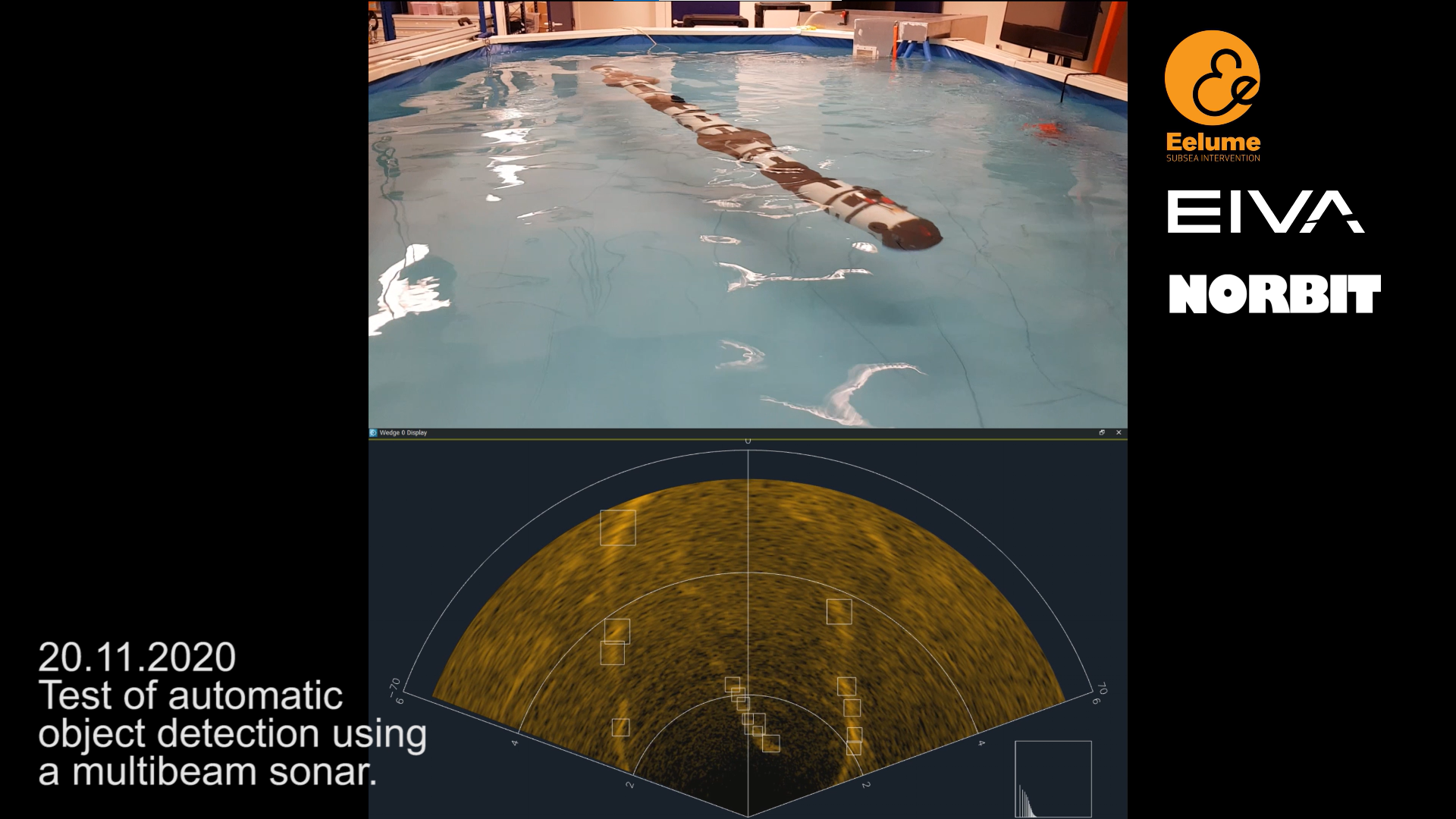

In the bottom screen, you can see the FLS view of the pool with the Eelume AUV in it – the squares show where NaviSuite software uses machine learning to automatically register objects, namely the AUV in the center, as well as interference from the sides of the pool

Capitalising on recent advances in computer vision, we are developing a software tool which can create 3D reconstructions from images or videos. To achieve this, we use a combination of Visual Simultaneous Localisation and Mapping (VSLAM) and machine learning. This tool has great potential to support autonomous navigation, as it can both map the surroundings of a vehicle and provide information about the vehicle’s position.

Instead of requiring a specific hardware setup, our final goal is to create a VSLAM software tool designed to utilise all available equipment. With the right equipment, such as a combination of cameras and a positioning sensor, it can actually achieve higher resolution than sonar data. For these reasons, we expect VSLAM to play an essential role in the future of autonomous hydrographic operations in clear water.

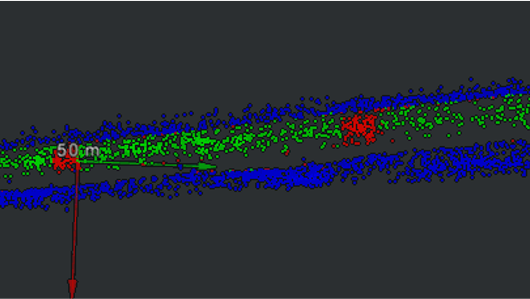

A sparse point cloud of a pipeline, created using VSLAM and coloured using analysis by NaviSuite Deep Learning – the green points are the pipeline, the red are anodes and blue shows the sea bottom

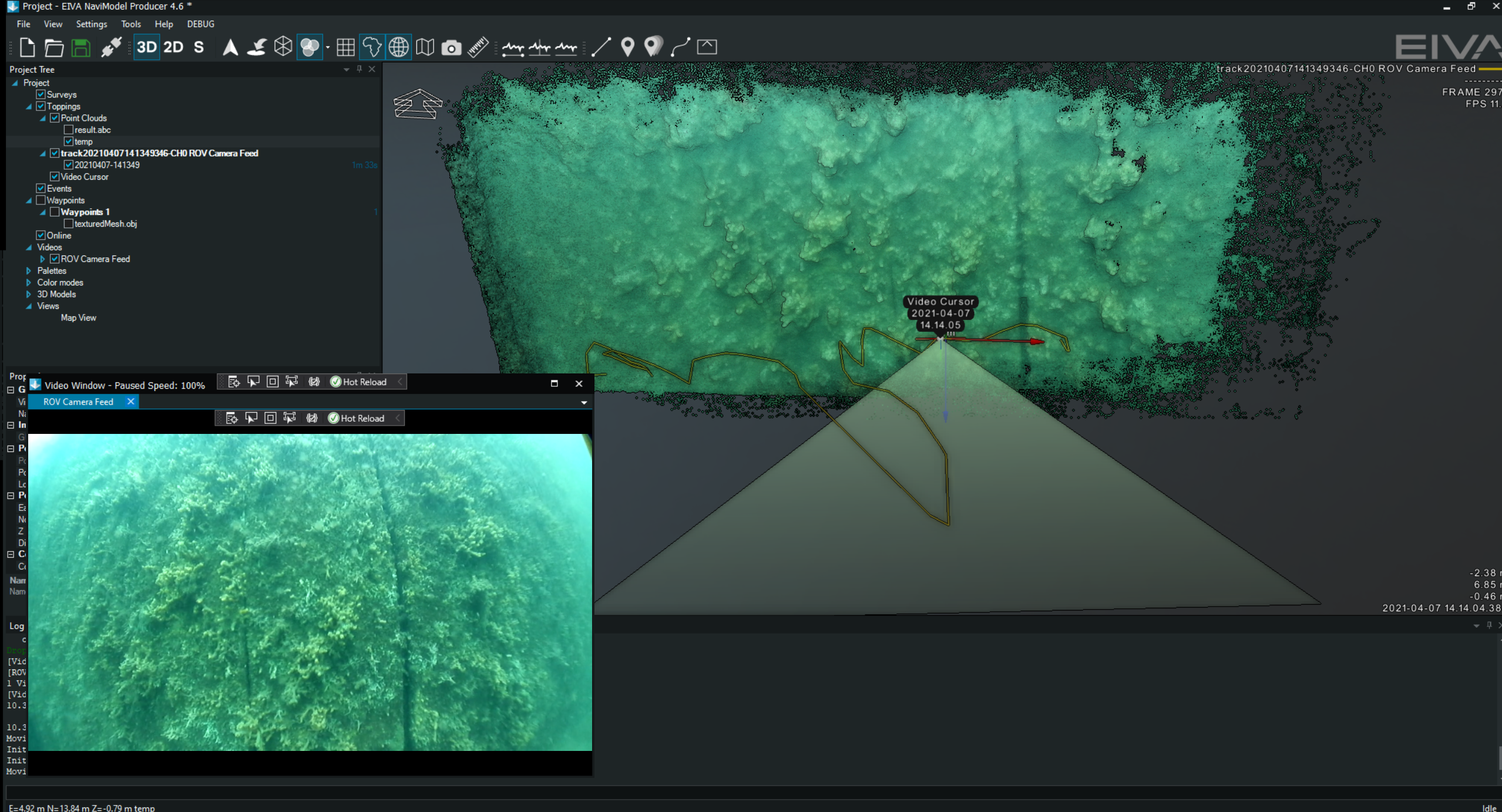

A dense point cloud showing a section of harbour wall – created with VSLAM based on video data from an ROV

Before autonomous navigation, and even before automatic data analysis, the acquired data can be automatically cleaned. With our data cleaning feature, EC-3D, it is possible to do this by applying a filter to clean sonar data in real time, during data acquisition in NaviPac.

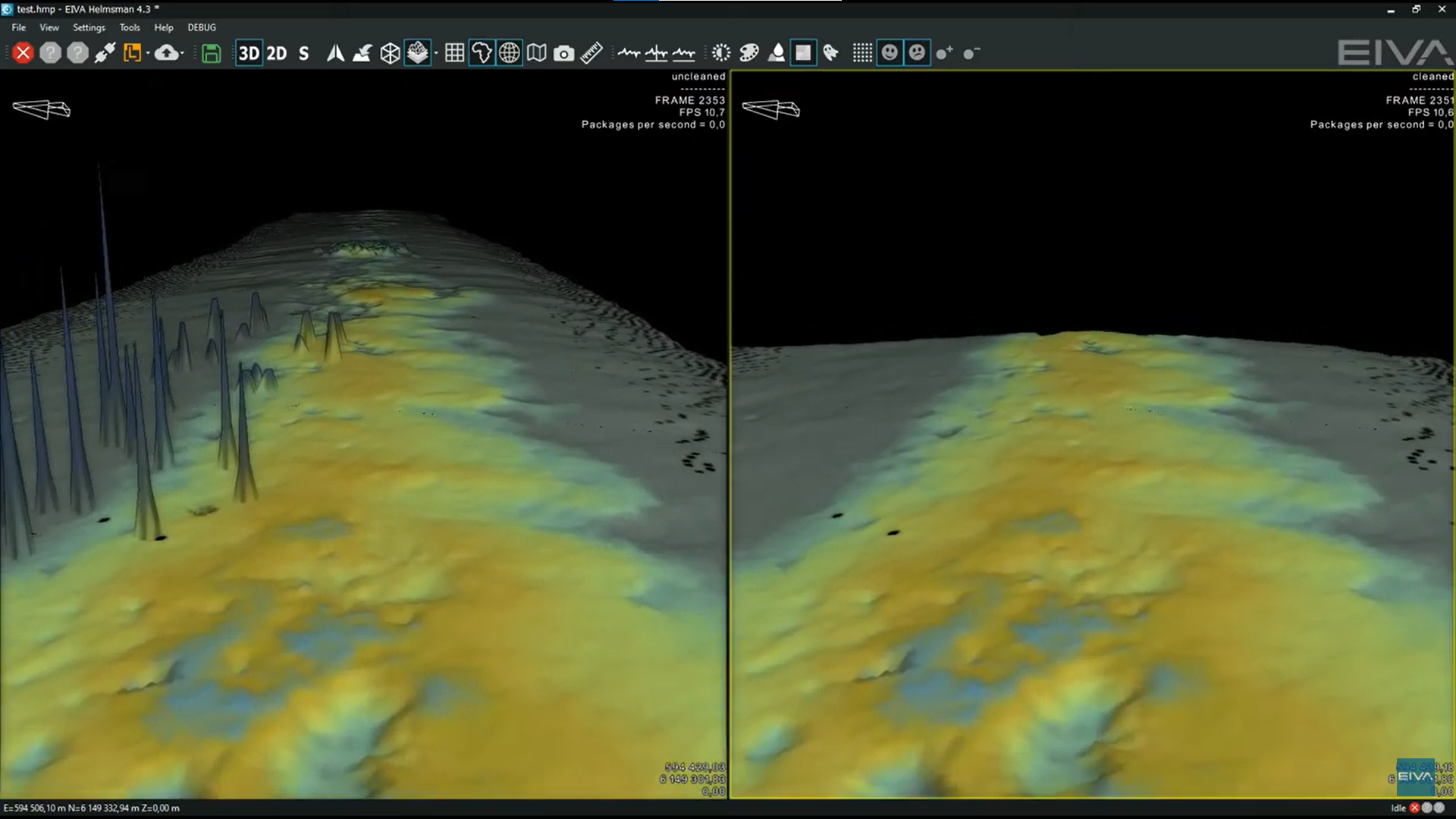

An unfiltered DTM (left) and an online cleaned DTM (right) displayed simultaneously in NaviPac Helmsman’s Display

EC-3D currently cleans data using a mix of cleaning methods, which can be combined manually to allow for precise automatic cleaning for a given setup. Each setup will generally have its own unique noise based on the environment and equipment, requiring this custom data cleaning. However, we aim to cut out this step with the help of machine learning. We are in the process of designing an even more automated data cleaning tool to add to the EC-3D family.

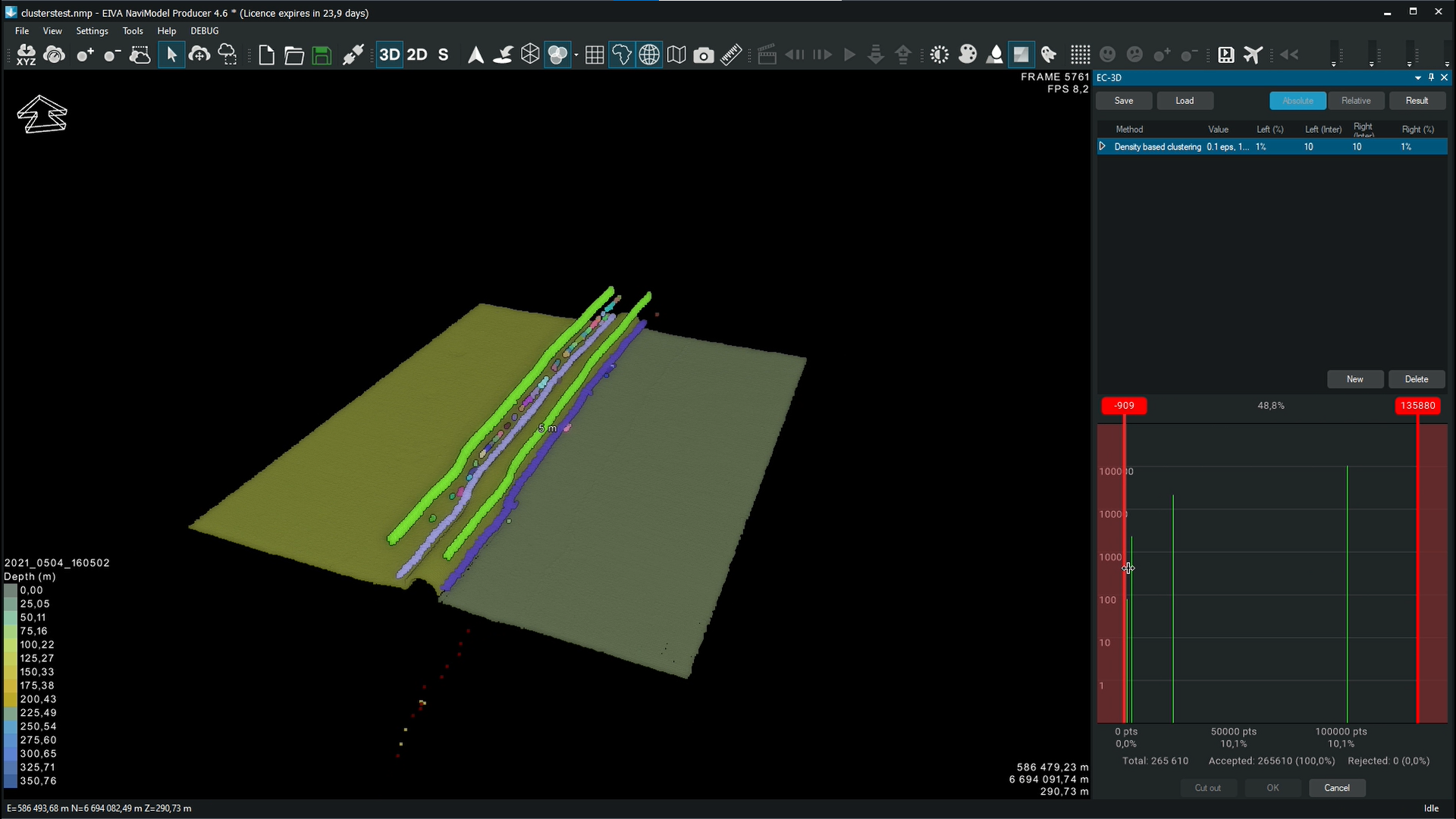

This upcoming data cleaning method uses the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm. This clustering algorithm divides data points into clusters based on how dense an area is. In other words, data points which are close to each other may be grouped in clusters. You can see in the example below how such clusters might look. While the seabed and pipe are grouped together, the noise around the pipe consists of many clusters, which can then all be grouped together for removal through easy instructions given in the EC-3D tool.

In this video you can see data cleaning performed with EC-3D using DBSCAN for a section of surveyed pipeline
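For readers unfamiliar with DBSCAN, the following is a minimal pure-Python sketch of the algorithm on a handful of made-up 2D soundings. It is not the EC-3D implementation, and the parameter values (`eps`, `min_pts`) are arbitrary choices for the example. A point with at least `min_pts` neighbours within `eps` is a core point; clusters grow outwards from core points, and points that cannot be reached from any core point are labelled as noise.

```python
import math

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbours(i):
        # All points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE          # may later be claimed as a border point
            continue
        cluster += 1                   # point i is a core point: start a cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster    # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            nbrs = neighbours(j)
            if len(nbrs) >= min_pts:   # j is also a core point: keep expanding
                queue.extend(nbrs)
    return labels

# Made-up (x, z) soundings: a dense, flat seabed plus two stray points above it.
seabed = [(0.5 * i, 0.0) for i in range(10)]
strays = [(1.0, 3.0), (4.0, 5.0)]
labels = dbscan(seabed + strays, eps=0.6, min_pts=3)
print(labels)
# → [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, -1, -1]
```

In this toy case the stray soundings are sparse enough to be labelled as noise outright. In real survey data, noise can be dense enough to form clusters of its own, which is the situation described above, where the noise clusters are then selected together for removal.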

Machine learning is helping us to optimise all steps in hydrographic operations, from navigation and acquisition to processing. NaviSuite supports hydrographic operations the whole way, as it is a single, complete software package for virtually any subsea task. We look forward to seeing the development of autonomous operations save NaviSuite users even more time and money.

If you want to get involved, we are more than happy to consider new projects or discuss current ones. We often need help beta-testing our solutions, so whether you are interested in trying out autonomous navigation or VSLAM computer vision, don’t hesitate to reach out. We are also thankful for any data that can help us train NaviSuite Deep Learning. This could be of pipelines, corals, mussels – or maybe you have a whole new application in mind?

This article was written for and originally published in German hydrographic news issue 119, a special edition on using AI in hydrography.